OpenAI Partial Stream

Turn a stream of tokens into a parsable JSON object as early as possible. Enable streaming UIs for LLM-based AI apps.

Overview

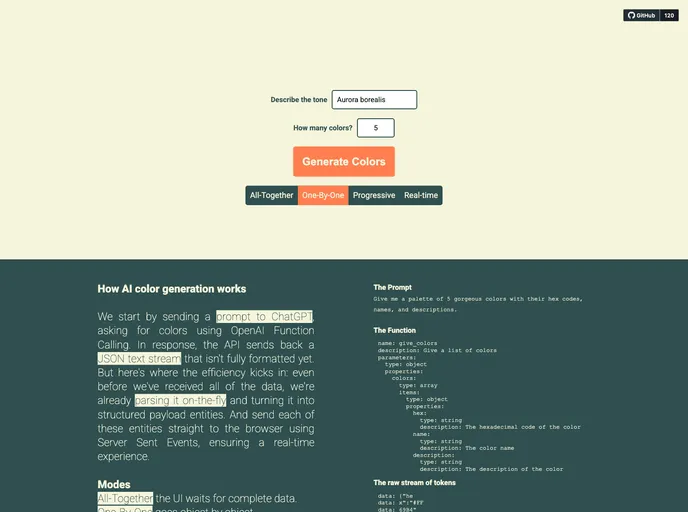

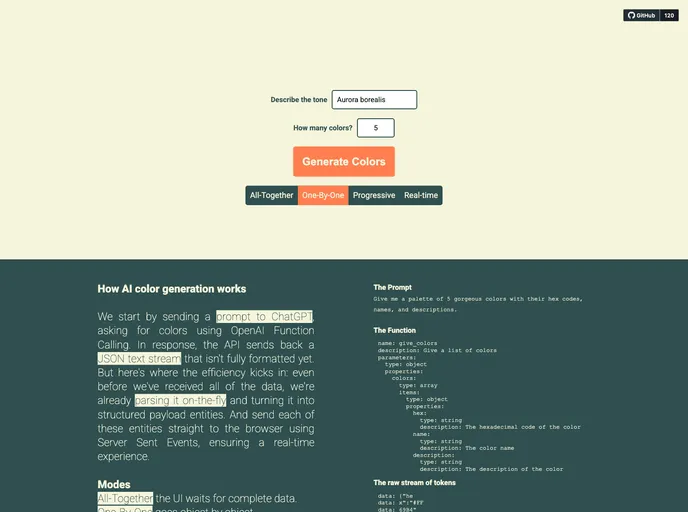

LLM responses arrive token by token, and users should not have to wait for the last token before seeing anything. Parsing the token stream into a valid JSON object before the stream ends lets an LLM-based application render content as soon as it becomes available, which keeps the interface responsive.
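To make the idea concrete, here is a minimal sketch of parsing an incomplete JSON prefix. It is not this library's implementation or API: the helper names `closeOpenStructures` and `parsePartialJson` are hypothetical, and the approach (close any open string, array, or object, then drop trailing characters until `JSON.parse` succeeds) is just one simple way to do it.

```typescript
// Hypothetical helper: append the closers that an incomplete JSON prefix is missing.
function closeOpenStructures(prefix: string): string {
  const closers: string[] = [];
  let inString = false;
  let escaped = false;
  for (let i = 0; i < prefix.length; i++) {
    const ch = prefix.charAt(i);
    if (inString) {
      if (escaped) escaped = false;
      else if (ch === "\\") escaped = true;
      else if (ch === '"') inString = false;
    } else if (ch === '"') {
      inString = true;
    } else if (ch === "{") {
      closers.push("}");
    } else if (ch === "[") {
      closers.push("]");
    } else if (ch === "}" || ch === "]") {
      closers.pop();
    }
  }
  // Close a dangling string first, then the open arrays/objects, innermost first.
  return prefix + (inString ? '"' : "") + closers.reverse().join("");
}

// Hypothetical helper: best-effort parse of a JSON prefix.
// If the closed prefix is still invalid (dangling key, trailing comma,
// half-written literal), drop one trailing character and try again.
function parsePartialJson(prefix: string): unknown {
  for (let end = prefix.length; end > 0; end--) {
    try {
      return JSON.parse(closeOpenStructures(prefix.slice(0, end)));
    } catch {
      // Not parsable yet: retry with a shorter prefix.
    }
  }
  return undefined;
}

// Simulated token stream: re-parse the accumulated text after every token.
const tokens: string[] = ['{"name": "A', 'da", "tags": ["ma', 'th"]}'];
let received = "";
const snapshots: unknown[] = [];
tokens.forEach((token) => {
  received += token;
  snapshots.push(parsePartialJson(received));
});
// snapshots:
//   { name: "A" }
//   { name: "Ada", tags: ["ma"] }
//   { name: "Ada", tags: ["math"] }
```

Note that intermediate snapshots can contain truncated strings or numbers (`"A"` before `"Ada"`), so a UI built on this sketch should treat every value as provisional until the stream ends. Re-parsing the whole buffer on each token is quadratic in the worst case, which is acceptable for a sketch but not for long responses.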

The same approach works with OpenAI Function Calling and with JSON streams that contain multiple entities, which can be separated and handled one at a time. With this tooling, an application that would otherwise block until the full response arrives can stay interactive while data is still coming in.

Features

- Real-Time Parsing: Convert a stream of tokens into a parsable JSON object, so data can be used before the stream ends.
- Streaming UI Implementation: Build responsive user interfaces that refresh content in real time as new data arrives.
- OpenAI Function Calling Integration: Use OpenAI Function Calling to start processing the stream early, improving overall application responsiveness.
- Entity Extraction: Parse JSON streams into distinct entities for more structured data handling and user interaction.
- Flexible Streaming Modes: Choose from several streaming modes, including No Stream, Stream Object, and Key Value streams, to suit your application's needs.
- Schema Validation: Validate incoming data against a predefined schema, so that only data conforming to the schema is returned and processed.
- Enhanced User Experience: Reduce waiting times and network traffic by delivering information incrementally, keeping users engaged and informed.
- Dynamic Updates: Offer incremental updates through token-by-token delivery, allowing users to interact with the application as new data arrives.
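The entity extraction and schema validation features can be pictured with a small sketch. This is an illustration under stated assumptions, not the library's API: `createEntityExtractor`, `isColor`, and the `Color` shape are hypothetical names, the stream is assumed to be a top-level JSON array of objects, and the "schema" is a hand-written type guard rather than a real schema library.

```typescript
// Hypothetical entity shape used only for this example.
interface Color {
  name: string;
  hex: string;
}

// Hand-written stand-in for schema validation.
function isColor(value: unknown): value is Color {
  if (typeof value !== "object" || value === null) return false;
  const record = value as { [key: string]: unknown };
  return typeof record["name"] === "string" && typeof record["hex"] === "string";
}

// Returns a push(chunk) function that emits every newly completed, valid entity.
// Assumes the stream is a top-level JSON array of objects.
function createEntityExtractor<T>(
  isValid: (value: unknown) => value is T
): (chunk: string) => T[] {
  let buffer = "";
  let scanned = 0;   // index of the next unscanned character
  let depth = 0;     // nesting depth of "{"
  let start = -1;    // index where the current outermost object began
  let inString = false;
  let escaped = false;

  return function push(chunk: string): T[] {
    buffer += chunk;
    const found: T[] = [];
    for (; scanned < buffer.length; scanned++) {
      const ch = buffer.charAt(scanned);
      if (inString) {
        if (escaped) escaped = false;
        else if (ch === "\\") escaped = true;
        else if (ch === '"') inString = false;
      } else if (ch === '"') {
        inString = true;
      } else if (ch === "{") {
        if (depth === 0) start = scanned;
        depth++;
      } else if (ch === "}" && depth > 0) {
        depth--;
        if (depth === 0) {
          try {
            const parsed: unknown = JSON.parse(buffer.slice(start, scanned + 1));
            // Only entities that pass validation are handed to the caller.
            if (isValid(parsed)) found.push(parsed);
          } catch {
            // Malformed object: skip it in this sketch.
          }
        }
      }
    }
    return found;
  };
}

// Simulated stream: chunk boundaries fall in the middle of keys and values.
const chunks: string[] = [
  '[{"name":"red","he',
  'x":"#f00"},{"name":"gr',
  'een"},{"name":"blue","hex":"#00f"}]',
];
const pushChunk = createEntityExtractor(isColor);
const seen: Color[] = [];
chunks.forEach((chunk) => {
  pushChunk(chunk).forEach((color) => seen.push(color));
});
// seen holds "red" and "blue"; "green" is dropped because it has no "hex".
```

Each entity is emitted once, as soon as its closing brace arrives, so the UI can render complete items while later ones are still streaming. The buffer is never trimmed here; a longer-lived version would discard text that has already been emitted.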